The post DBpedia Snapshot 2022-09 Release appeared first on DBpedia Association.

News since DBpedia Snapshot 2022-03

- New Abstract Extractor thanks to GSoC 2022 (credit to Celian Ringwald)

- Work in progress: streamlining community issue reporting and fixing on GitHub

What is the “DBpedia Snapshot” Release?

Historically, this release has been associated with many names: “DBpedia Core”, “EN DBpedia”, and — most confusingly — just “DBpedia”. In fact, it is a combination of —

- EN Wikipedia data — A small, but very useful, subset (~ 1 Billion triples or 14%) of the whole DBpedia extraction using the DBpedia Information Extraction Framework (DIEF), comprising structured information extracted from the English Wikipedia plus some enrichments from other Wikipedia language editions, notably multilingual abstracts in ar, ca, cs, de, el, eo, es, eu, fr, ga, id, it, ja, ko, nl, pl, pt, sv, uk, ru, zh.

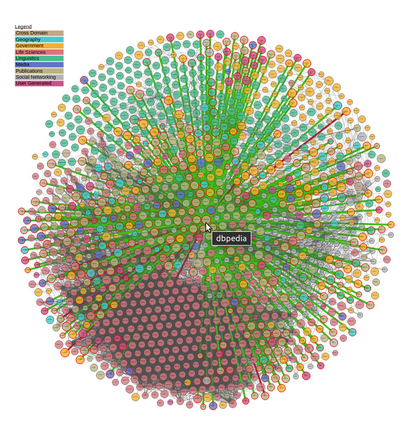

- Links — 62 million community-contributed cross-references and owl:sameAs links to other linked data sets on the Linked Open Data (LOD) Cloud that allow to effectively find and retrieve further information from the largest, decentral, change-sensitive knowledge graph on earth that has formed around DBpedia since 2007.

- Community extensions — Community-contributed extensions such as additional ontologies and taxonomies.

Release Frequency & Schedule

Going forward, releases will be scheduled for the 1st of February, May, August, and November (with +/- 5 days tolerance), and are named using the same date convention as the Wikipedia Dumps that served as the basis for the release. An example of the release timeline is shown below:

| September 6–8 | September 8–20 | September 20–November 1 | November 1–November 15 |
| --- | --- | --- | --- |
| Wikipedia dumps for June 1 become available on https://dumps.wikimedia.org/ | Download and extraction with DIEF | Post-processing and quality-control period | Linked Data and SPARQL endpoint deployment |
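As a rough illustration of the 5-day tolerance, the following Python sketch computes the earliest and latest date around each scheduled release day. The function name `release_windows` and the example year 2023 are assumptions made for illustration; only the months, the day of the month, and the tolerance are taken from the schedule above.

```python
from datetime import date, timedelta

# Months and tolerance as stated in the schedule; everything else is illustrative.
RELEASE_MONTHS = (2, 5, 8, 11)
TOLERANCE = timedelta(days=5)


def release_windows(year, day=1):
    """Return one (earliest, target, latest) tuple per scheduled release."""
    windows = []
    for month in RELEASE_MONTHS:
        target = date(year, month, day)
        windows.append((target - TOLERANCE, target, target + TOLERANCE))
    return windows


for earliest, target, latest in release_windows(2023):
    print(f"{target.isoformat()}: {earliest.isoformat()} to {latest.isoformat()}")
```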

Data Freshness

Given the timeline above, the EN Wikipedia data of DBpedia Snapshot has a lag of 1-4 months. We recommend the following strategies to mitigate this:

- DBpedia Snapshot as a kernel for Linked Data: Following the Linked Data paradigm, we recommend using the Linked Data links to other knowledge graphs to retrieve high-quality and recent information. DBpedia’s network consists of the best knowledge engineers in the world, working together, using linked data principles to build a high-quality, open, decentralized knowledge graph network around DBpedia. Freshness and change-sensitivity are two of the greatest data-related challenges of our time, and can only be overcome by linking data across data sources. The “Big Data” approach of copying data into a central warehouse is inevitably challenged by issues such as co-evolution and scalability.

- DBpedia Live: Wikipedia is unmistakably the richest, most recent body of human knowledge and source of news in the world. DBpedia Live is just minutes behind edits on Wikipedia, which means that as soon as any of the 120k Wikipedia editors presses the “save” button, DBpedia Live will extract fresh data and update. DBpedia Live is currently in tech preview status, and we are working towards a highly available and reliable business API with support. DBpedia Live consists of the DBpedia Live Sync API (for syncing into any kind of on-site database), Linked Data, and a SPARQL endpoint.

- Latest-Core is a dynamically updating Databus Collection. Our automated extraction robot “MARVIN” publishes monthly dev versions of the full extraction, which are then refined and enriched to become Snapshot.

Data Quality & Richness

We would like to acknowledge the excellent work of Wikipedia editors (~46k active editors for EN Wikipedia), who are ultimately responsible for collecting information in Wikipedia’s infoboxes, which are refined by DBpedia’s extraction into our knowledge graphs. Wikipedia’s infoboxes are steadily growing each month and, according to our measurements, grow by 150% every three years. EN Wikipedia’s infoboxes even doubled in this timeframe. This richness of knowledge drives the DBpedia Snapshot knowledge graph and is further potentiated by synergies with linked data cross-references. Statistics are given below.

Data Access & Interaction Options

Linked Data

Linked Data is a principled approach to publishing RDF data on the Web that enables interlinking data between different data sources, courtesy of the built-in power of Hyperlinks as unique Entity Identifiers.

HTML pages comprising hyperlinks that conform to Linked Data principles are one way to interact with the data provided by the DBpedia Snapshot, either manually in a web browser or programmatically using REST interaction patterns via the https://dbpedia.org/resource/{entity-label} pattern. Naturally, we encourage Linked Data interactions, but we expect user agents to honor the cache-control HTTP response header during massive crawl operations. See the instructions for accessing Linked Data, which is available in 10 formats.
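A minimal Python sketch of the programmatic route, assuming that the server honors HTTP content negotiation via the `Accept` header and that `text/turtle` is among the offered formats. The helper names are hypothetical, and the underscore convention for labels is an assumption based on how Wikipedia titles are usually encoded.

```python
import urllib.parse
import urllib.request


def build_entity_request(entity_label, accept="text/turtle"):
    """Build (but do not send) a GET request for a DBpedia resource."""
    # Assumption: spaces in labels are written as underscores in resource IRIs.
    path = urllib.parse.quote(entity_label.replace(" ", "_"), safe="_(),'")
    url = f"https://dbpedia.org/resource/{path}"
    return urllib.request.Request(url, headers={"Accept": accept})


def fetch_entity(entity_label, accept="text/turtle", timeout=30):
    """Send the request and return the response body as text (needs network)."""
    request = build_entity_request(entity_label, accept)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8")


req = build_entity_request("Leipzig")
print(req.full_url, req.get_header("Accept"))
```

For crawls, a client built on this sketch should still read the cache-control header of each response and avoid re-fetching documents that are still fresh.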

SPARQL Endpoint

This service enables some astonishing queries against Knowledge Graphs derived from Wikipedia content. The Query Services Endpoint that makes this possible is identified by http://dbpedia.org/sparql, and it currently handles 7.2 million queries daily on average. See powerful queries and instructions (incl. rates and limitations).

An effective usage pattern is to filter a relevant subset of entity descriptions for your use case via SPARQL and then combine it with the power of Linked Data: look up (de-reference) data via owl:sameAs property links to retrieve specific and recent data from other Knowledge Graphs across the massive Linked Open Data Cloud.
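The first half of this pattern can be sketched in Python as follows. The sketch only builds the request URL; the `query` parameter is part of the SPARQL protocol, whereas the `format` parameter is an assumption about what the endpoint accepts, and the function names are hypothetical.

```python
import urllib.parse

SPARQL_ENDPOINT = "http://dbpedia.org/sparql"


def same_as_query(resource_iri, limit=20):
    """Return a SPARQL query listing owl:sameAs targets of one resource."""
    return (
        "PREFIX owl: <http://www.w3.org/2002/07/owl#>\n"
        f"SELECT ?other WHERE {{ <{resource_iri}> owl:sameAs ?other }} "
        f"LIMIT {int(limit)}"
    )


def query_url(query, result_format="application/sparql-results+json"):
    """Encode a query as a GET URL for the endpoint."""
    params = urllib.parse.urlencode({"query": query, "format": result_format})
    return f"{SPARQL_ENDPOINT}?{params}"


url = query_url(same_as_query("http://dbpedia.org/resource/Leipzig"))
print(url)
```

Each IRI returned for `?other` can then be de-referenced as Linked Data to obtain the more recent description held by the external source.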

Additionally, DBpedia Snapshot dumps and additional data from the complete collection of datasets derived from Wikipedia are provided by the DBpedia Databus for use in your own SPARQL-accessible Knowledge Graphs.

DBpedia Ontology

This Snapshot Release was built with DBpedia Ontology (DBO) version https://databus.dbpedia.org/ontologies/dbpedia.org/ontology--DEV/2021.11.08-124002. We thank all DBpedians for their contributions to the ontology and the mappings. See documentation and visualizations, class tree and properties, wiki.

DBpedia Snapshot Statistics

Overview. Overall the current Snapshot Release contains more than 850 million facts (triples).

The DBpedia ontology is the heart of DBpedia. Our community continuously contributes to the DBpedia ontology schema and the DBpedia infobox-to-ontology mappings by actively using the DBpedia Mappings Wiki.

The current Snapshot Release uses a total of 55 thousand properties, of which 1377 are defined by the DBpedia ontology.

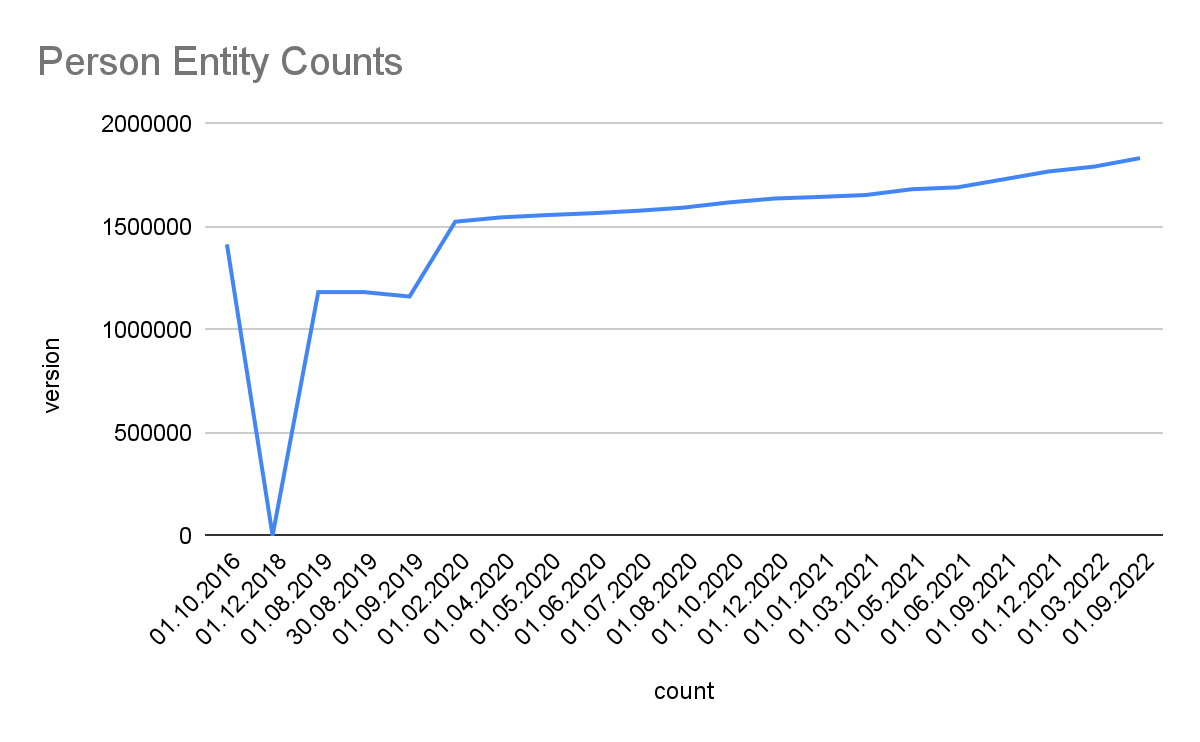

Classes. Knowledge in Wikipedia is constantly growing at a rapid pace. We use the DBpedia Ontology Classes to measure the growth: Total number in this release (in brackets we give: a) growth to the previous release, which can be negative temporarily and b) growth compared to Snapshot 2016-10):

- Persons: 1833500 (1.02%, 1.15%)

- Places: 757436 (1.01%, 1842.91%), including but not limited to 597548 (1.01%, 5584.56%) populated places

- Works: 619280 (1.01%, 628.71%), including but not limited to

  - 158895 (1.01%, 1.40%) music albums

  - 147192 (1.02%, 16.25%) films

  - 25182 (1.01%, 12.71%) video games

- Organizations: 350329 (1.01%, 110.83%), including but not limited to

  - 88722 (1.01%, 2.28%) companies

  - 64094 (0.99%, 64094.00%) educational institutions

- Species: 1955775 (1.01%, 325962.50%)

- Plants: 4977 (0.64%, 1.10%)

- Diseases: 10837 (1.02%, 8.74%)
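The first bracketed value appears to be the ratio of the current count to the previous release's count rather than a percentage change. This is an inference from the published figures, not something the post states. The Python sketch below recomputes it for three classes, using the counts listed here and in the 2022-03 release post (there was no 2022-06 snapshot); the variable and function names are illustrative.

```python
# Counts copied from the class lists of the 2022-09 and 2022-03 posts.
counts_2022_09 = {"Persons": 1833500, "Places": 757436, "Plants": 4977}
counts_2022_03 = {"Persons": 1792308, "Places": 748372, "Plants": 7718}


def growth_ratio(current, previous):
    """Ratio of current to previous count, rounded to two decimals."""
    return round(current / previous, 2)


ratios = {
    name: growth_ratio(counts_2022_09[name], counts_2022_03[name])
    for name in counts_2022_09
}
print(ratios)  # {'Persons': 1.02, 'Places': 1.01, 'Plants': 0.64}
```

Under this reading, a value below 1, such as the one for Plants, means the class shrank between releases.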

Detailed Growth of Classes: The image below shows the detailed growth for one class. Click on the links for other classes: Place, PopulatedPlace, Work, Album, Film, VideoGame, Organisation, Company, EducationalInstitution, Species, Plant, Disease. For further classes, adapt the query by replacing the <http://dbpedia.org/ontology/CLASS> URI. Note that 2018 was a development phase with some failed extractions. The stats were generated with the Databus VOID Mod.

Links. Linked Data cross-references between decentralized datasets are the foundation of and access point to the Linked Data Web. The latest Snapshot Release provides over 130.6 million links from 7.62 million entities to 179 external sources.

Top 11

33,860,047 http://www.wikidata.org

7,147,970 https://global.dbpedia.org

4,308,772 http://yago-knowledge.org

3,832,100 http://de.dbpedia.org

3,704,534 http://fr.dbpedia.org

2,971,751 http://viaf.org

2,912,859 http://it.dbpedia.org

2,903,130 http://es.dbpedia.org

2,754,466 http://fa.dbpedia.org

2,571,787 http://sr.dbpedia.org

2,563,793 http://ru.dbpedia.org

Top 10 without DBpedia namespaces

33,860,047 http://www.wikidata.org

4,308,772 http://yago-knowledge.org

2,971,751 http://viaf.org

1,687,386 http://d-nb.info

609,604 http://sws.geonames.org

596,134 http://umbel.org

533,320 http://data.bibliotheken.nl

430,839 http://www.w3.org

417,034 http://musicbrainz.org

104,433 http://linkedgeodata.org
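The relationship between the two lists can be sketched in Python: parse each "count URL" line and drop targets in a DBpedia namespace. The helper names are hypothetical, and the `dbpedia.org` substring test is a simplifying assumption about how the second list was filtered.

```python
def parse_link_counts(text):
    """Parse lines of the form '33,860,047 http://...' into (count, target) pairs."""
    rows = []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        count, target = line.split(maxsplit=1)
        rows.append((int(count.replace(",", "")), target))
    return rows


def without_dbpedia(rows):
    """Keep only link targets outside the DBpedia namespaces."""
    return [(count, target) for count, target in rows if "dbpedia.org" not in target]


# First five entries of the Top 11 list above.
top = parse_link_counts("""
33,860,047 http://www.wikidata.org
7,147,970 https://global.dbpedia.org
4,308,772 http://yago-knowledge.org
3,832,100 http://de.dbpedia.org
3,704,534 http://fr.dbpedia.org
""")
print(without_dbpedia(top))
```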

DBpedia Extraction Dumps on the Databus

All extracted files are reachable via the DBpedia account on the Databus. The Databus has two main structures:

- In groups, artifacts, version, similar to software in Maven: https://databus.dbpedia.org/$user/$group/$artifact/$version/$filename

- In Databus collections that are SPARQL queries over https://databus.dbpedia.org/yasui filtering and selecting files from these artifacts.
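The Maven-like path layout can be illustrated with a small Python sketch that composes and parses file URLs. The helper names are hypothetical, and the artifact, version, and filename in the example are placeholders, not real Databus entries.

```python
from urllib.parse import urlsplit

DATABUS_BASE = "https://databus.dbpedia.org"
FIELDS = ("user", "group", "artifact", "version", "filename")


def databus_file_url(user, group, artifact, version, filename):
    """Compose a file URL following $user/$group/$artifact/$version/$filename."""
    return "/".join((DATABUS_BASE, user, group, artifact, version, filename))


def parse_databus_file_url(url):
    """Split a file URL back into its five named path segments."""
    parts = urlsplit(url).path.strip("/").split("/")
    if len(parts) != len(FIELDS):
        raise ValueError(f"expected {len(FIELDS)} path segments, got {len(parts)}")
    return dict(zip(FIELDS, parts))


# Placeholder values for illustration only.
example = databus_file_url(
    "dbpedia", "mappings", "example-artifact", "2022.09.01", "example.ttl.bz2"
)
print(parse_databus_file_url(example))
```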

Snapshot Download. To download the DBpedia Snapshot, we prepared this collection, which also includes detailed release notes: https://databus.dbpedia.org/dbpedia/collections/dbpedia-snapshot-2022-03

The collection is roughly equivalent to http://downloads.dbpedia.org/2016-10/core/

Collections can be downloaded in many different ways. Some download modalities, such as a bash script, SPARQL, and a plain URL list, are found in the tabs at the collection. Files are provided as bzip2-compressed N-Triples files. If you need a different format or compression, you can also use the “Download-As” function of the Databus Client (GitHub); e.g., -s $collection -c gzip would download the collection and convert it to GZIP during download.
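Once a file is downloaded, it can be read without unpacking it to disk first. A minimal Python sketch using only the standard library follows. The function name is hypothetical, and the sample triple is made up for the demonstration rather than taken from a real dump.

```python
import bz2
import io


def iter_ntriples_lines(fileobj):
    """Yield non-empty, non-comment lines from a bzip2-compressed N-Triples stream."""
    with bz2.open(fileobj, mode="rt", encoding="utf-8") as text:
        for line in text:
            line = line.strip()
            if line and not line.startswith("#"):
                yield line


# In-memory stand-in for a downloaded .ttl.bz2 file.
sample = (
    b"<http://dbpedia.org/resource/Leipzig> "
    b"<http://www.w3.org/2000/01/rdf-schema#label> \"Leipzig\"@en .\n"
    b"# a comment line\n"
)
lines = list(iter_ntriples_lines(io.BytesIO(bz2.compress(sample))))
print(len(lines))
```

For a file on disk, pass the path to `bz2.open` in place of the in-memory stream; the line-by-line iteration keeps memory use flat even for large dumps.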

Replicating DBpedia Snapshot on your server can be done via Docker; see https://hub.docker.com/r/dbpedia/virtuoso-sparql-endpoint-quickstart

git clone https://github.com/dbpedia/virtuoso-sparql-endpoint-quickstart.git

cd virtuoso-sparql-endpoint-quickstart

COLLECTION_URI=https://databus.dbpedia.org/dbpedia/collections/dbpedia-snapshot-2022-09 VIRTUOSO_ADMIN_PASSWD=password docker-compose up

Download files from the whole DBpedia extraction. The whole extraction consists of approx. 20 Billion triples and 5000 files created from 140 languages of Wikipedia, Commons and Wikidata. They can be found in https://databus.dbpedia.org/dbpedia/(generic|mappings|text|wikidata)

You can copy-edit a collection and create your own customized collections via “Actions” -> “Copy Edit”; e.g., you can Copy Edit the snapshot collection above, remove some files that you do not need, and add files from other languages. Please see the Rhizomer use case: Best way to download specific parts of DBpedia. Of course, this only refers to the archived dumps on the Databus for users who want to bulk download and deploy into their own infrastructure. Linked Data and SPARQL allow for filtering the content using a small data pattern.

Acknowledgments

First and foremost, we would like to thank our open community of knowledge engineers for finding & fixing bugs and for supporting us by writing data tests. We would also like to acknowledge the DBpedia Association members for constantly innovating the areas of knowledge graphs and linked data and pushing the DBpedia initiative with their know-how and advice. OpenLink Software supports DBpedia by hosting SPARQL and Linked Data; University Mannheim, the German National Library of Science and Technology (TIB) and the Computer Center of University Leipzig provide persistent backups and servers for extracting data. We thank Marvin Hofer and Mykola Medynskyi for technical preparation. This work was partially supported by grants from the Federal Ministry for Economic Affairs and Energy of Germany (BMWi) for the LOD-GEOSS Project (03EI1005E), as well as for the PLASS Project (01MD19003D).

The post DBpedia Snapshot 2022-06 Release appeared first on DBpedia Association.

You can still use the last Snapshot Release of version 2022-03.

We want to address the current problem and future solutions in the following.

We encountered several new issues, but the major problem is that the current version of DBpedia’s Abstract Extractor is no longer working. Wikimedia/Wikipedia seems to have tightened the requests-per-second restrictions on their old API.

- Current API https://en.wikipedia.org/w/api.php

As a result, we could not extract any version of English abstracts for April, May, or June 2022 (let alone the other 138 languages). We decided not to publish a mixed version with overlapping core data that is older than three months (e.g., abstracts and mapping-based data).

As a solution, a GSoC project specifying the task of improving abstract extraction was already promoted in early 2022. The project was accepted and is currently running. We tested several promising new strategies for implementing a new Abstract Extractor:

- New API https://en.wikipedia.org/api/rest_v1/

- Local deployment of MediaWiki for Wikitext-template parsing
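Whichever API is used, a client has to stay below the server's request-rate limit. The Python sketch below shows one simple way to do that, a fixed minimum interval between calls. It is not the strategy of the GSoC project; the `Throttle` class, the chosen rate, and the use of the `page/summary` route under the new API are assumptions made for illustration.

```python
import time
import urllib.parse

REST_BASE = "https://en.wikipedia.org/api/rest_v1/"


class Throttle:
    """Enforce a minimum interval between consecutive calls to wait()."""

    def __init__(self, max_per_second, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = 1.0 / max_per_second
        self._clock = clock
        self._sleep = sleep
        self._last = None

    def wait(self):
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now


def summary_url(title):
    """Build the URL of a page summary under the REST API base."""
    encoded = urllib.parse.quote(title.replace(" ", "_"), safe="")
    return REST_BASE + "page/summary/" + encoded


throttle = Throttle(max_per_second=5)  # hypothetical limit
for title in ("Leipzig", "Linked data"):
    throttle.wait()  # blocks until the next request is allowed
    print(summary_url(title))
```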

We will give further status updates on this project in the future.

The announcement of the next Snapshot Release (2022-09) is scheduled for November the 1st.

The post DBpedia Snapshot 2022-03 Release appeared first on DBpedia Association.

News since DBpedia Snapshot 2021-12

- Release notes are now maintained in the Databus Collection (2022-03)

- The Image and Abstract Extractors were improved

- Work in progress: streamlining community issue reporting and fixing on GitHub

What is the “DBpedia Snapshot” Release?

Historically, this release has been associated with many names: “DBpedia Core”, “EN DBpedia”, and — most confusingly — just “DBpedia”. In fact, it is a combination of —

- EN Wikipedia data — A small, but very useful, subset (~ 1 Billion triples or 14%) of the whole DBpedia extraction using the DBpedia Information Extraction Framework (DIEF), comprising structured information extracted from the English Wikipedia plus some enrichments from other Wikipedia language editions, notably multilingual abstracts in ar, ca, cs, de, el, eo, es, eu, fr, ga, id, it, ja, ko, nl, pl, pt, sv, uk, ru, zh.

- Links — 62 million community-contributed cross-references and owl:sameAs links to other linked data sets on the Linked Open Data (LOD) Cloud that allow to effectively find and retrieve further information from the largest, decentral, change-sensitive knowledge graph on earth that has formed around DBpedia since 2007.

- Community extensions — Community-contributed extensions such as additional ontologies and taxonomies.

Release Frequency & Schedule

Going forward, releases will be scheduled for the 15th of February, May, July, and October (with +/- 5 days tolerance), and are named using the same date convention as the Wikipedia Dumps that served as the basis for the release. An example of the release timeline is shown below:

| March 6–8 | March 8–20 | March 20–April 15 | April 15–May 1 |
| --- | --- | --- | --- |
| Wikipedia dumps for June 1 become available on https://dumps.wikimedia.org/ | Download and extraction with DIEF | Post-processing and quality-control period | Linked Data and SPARQL endpoint deployment |

Data Freshness

Given the timeline above, the EN Wikipedia data of DBpedia Snapshot has a lag of 1-4 months. We recommend the following strategies to mitigate this:

- DBpedia Snapshot as a kernel for Linked Data: Following the Linked Data paradigm, we recommend using the Linked Data links to other knowledge graphs to retrieve high-quality and recent information. DBpedia’s network consists of the best knowledge engineers in the world, working together, using linked data principles to build a high-quality, open, decentralized knowledge graph network around DBpedia. Freshness and change-sensitivity are two of the greatest data-related challenges of our time, and can only be overcome by linking data across data sources. The “Big Data” approach of copying data into a central warehouse is inevitably challenged by issues such as co-evolution and scalability.

- DBpedia Live: Wikipedia is unmistakably the richest, most recent body of human knowledge and source of news in the world. DBpedia Live is just minutes behind edits on Wikipedia, which means that as soon as any of the 120k Wikipedia editors presses the “save” button, DBpedia Live will extract fresh data and update. DBpedia Live is currently in tech preview status, and we are working towards a highly available and reliable business API with support. DBpedia Live consists of the DBpedia Live Sync API (for syncing into any kind of on-site database), Linked Data, and a SPARQL endpoint.

- Latest-Core is a dynamically updating Databus Collection. Our automated extraction robot “MARVIN” publishes monthly dev versions of the full extraction, which are then refined and enriched to become Snapshot.

Data Quality & Richness

We would like to acknowledge the excellent work of Wikipedia editors (~46k active editors for EN Wikipedia), who are ultimately responsible for collecting information in Wikipedia’s infoboxes, which are refined by DBpedia’s extraction into our knowledge graphs. Wikipedia’s infoboxes are steadily growing each month and, according to our measurements, grow by 150% every three years. EN Wikipedia’s infoboxes even doubled in this time frame. This richness of knowledge drives the DBpedia Snapshot knowledge graph and is further potentiated by synergies with linked data cross-references. Statistics are given below.

Data Access & Interaction Options

Linked Data

Linked Data is a principled approach to publishing RDF data on the Web that enables interlinking data between different data sources, courtesy of the built-in power of Hyperlinks as unique Entity Identifiers.

HTML pages comprising hyperlinks that conform to Linked Data principles are one way to interact with the data provided by the DBpedia Snapshot, either manually in a web browser or programmatically using REST interaction patterns via the https://dbpedia.org/resource/{entity-label} pattern. Naturally, we encourage Linked Data interactions, but we expect user agents to honor the cache-control HTTP response header during massive crawl operations. See the instructions for accessing Linked Data, which is available in 10 formats.

SPARQL Endpoint

This service enables some astonishing queries against Knowledge Graphs derived from Wikipedia content. The Query Services Endpoint that makes this possible is identified by http://dbpedia.org/sparql, and it currently handles 7.2 million queries daily on average. See powerful queries and instructions (incl. rates and limitations).

An effective usage pattern is to filter a relevant subset of entity descriptions for your use case via SPARQL and then combine it with the power of Linked Data: look up (de-reference) data via owl:sameAs property links to retrieve specific and recent data from other Knowledge Graphs across the massive Linked Open Data Cloud.

Additionally, DBpedia Snapshot dumps and additional data from the complete collection of datasets derived from Wikipedia are provided by the DBpedia Databus for use in your own SPARQL-accessible Knowledge Graphs.

DBpedia Ontology

This Snapshot Release was built with DBpedia Ontology (DBO) version https://databus.dbpedia.org/ontologies/dbpedia.org/ontology--DEV/2021.11.08-124002. We thank all DBpedians for their contributions to the ontology and the mappings. See documentation and visualizations, class tree and properties, wiki.

DBpedia Snapshot Statistics

Overview. Overall the current Snapshot Release contains more than 850 million facts (triples).

The DBpedia ontology is the heart of DBpedia. Our community continuously contributes to the DBpedia ontology schema and the DBpedia infobox-to-ontology mappings by actively using the DBpedia Mappings Wiki.

The current Snapshot Release uses a total of 55 thousand properties, of which 1377 are defined by the DBpedia ontology.

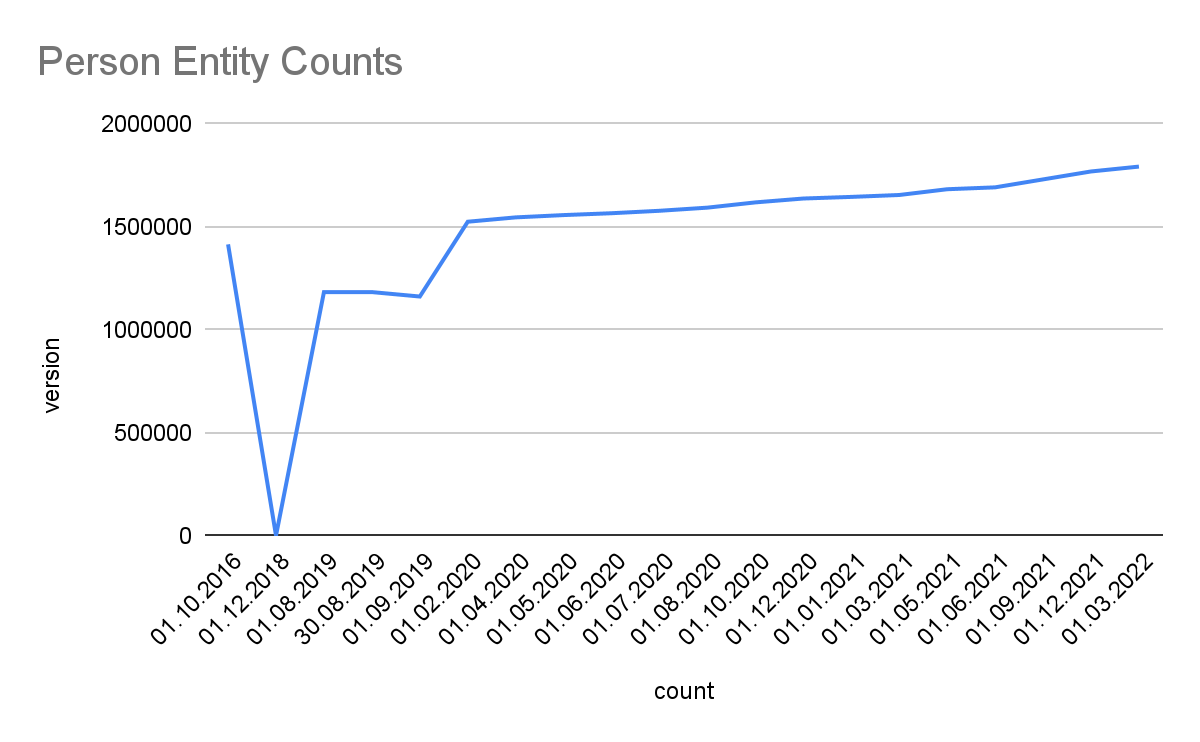

Classes. Knowledge in Wikipedia is constantly growing at a rapid pace. We use the DBpedia Ontology Classes to measure the growth: Total number in this release (in brackets we give: a) growth to the previous release, which can be negative temporarily and b) growth compared to Snapshot 2016-10):

- Persons: 1792308 (1.01%, 1.13%)

- Places: 748372 (1.00%, 1820.86%), including but not limited to 590481 (1.00%, 5518.51%) populated places

- Works: 610589 (1.00%, 619.89%), including but not limited to

  - 157566 (1.00%, 1.38%) music albums

  - 144415 (1.01%, 15.94%) films

  - 24829 (1.01%, 12.53%) video games

- Organizations: 345523 (1.01%, 109.31%), including but not limited to

  - 87621 (1.01%, 2.25%) companies

  - 64507 (1.00%, 64507.00%) educational institutions

- Species: 1933436 (1.01%, 322239.33%)

- Plants: 7718 (0.82%, 1.71%)

- Diseases: 10591 (1.00%, 8.54%)

Detailed Growth of Classes: The image below shows the detailed growth for one class. Click on the links for other classes: Place, PopulatedPlace, Work, Album, Film, VideoGame, Organisation, Company, EducationalInstitution, Species, Plant, Disease. For further classes, adapt the query by replacing the <http://dbpedia.org/ontology/CLASS> URI. Note that 2018 was a development phase with some failed extractions. The stats were generated with the Databus VOID Mod.

Links. Linked Data cross-references between decentralized datasets are the foundation of and access point to the Linked Data Web. The latest Snapshot Release provides over 130.6 million links from 7.62 million entities to 179 external sources.

Top 11

33,573,926 http://www.wikidata.org

7,005,750 https://global.dbpedia.org

4,308,772 http://yago-knowledge.org

3,768,764 http://de.dbpedia.org

3,642,704 http://fr.dbpedia.org

2,946,265 http://viaf.org

2,872,878,344 http://it.dbpedia.org

2,853,081 http://es.dbpedia.org

2,651,369 http://fa.dbpedia.org

2,552,761 http://sr.dbpedia.org

2,517,456 http://ru.dbpedia.org

Top 10 without DBpedia namespaces

33,573,926 http://www.wikidata.org

4,308,772 http://yago-knowledge.org

2,946,265 http://viaf.org

1,633,862 http://d-nb.info

601,227 http://sws.geonames.org

596,134 http://umbel.org

528,123 http://data.bibliotheken.nl

430,839 http://www.w3.org

373,293 http://musicbrainz.org

104,433 http://linkedgeodata.org

DBpedia Extraction Dumps on the Databus

All extracted files are reachable via the DBpedia account on the Databus. The Databus has two main structures:

- In groups, artifacts, version, similar to software in Maven: https://databus.dbpedia.org/$user/$group/$artifact/$version/$filename

- In Databus collections that are SPARQL queries over https://databus.dbpedia.org/yasui filtering and selecting files from these artifacts.

Snapshot Download. To download the DBpedia Snapshot, we prepared this collection, which also includes detailed release notes: https://databus.dbpedia.org/dbpedia/collections/dbpedia-snapshot-2022-03

The collection is roughly equivalent to http://downloads.dbpedia.org/2016-10/core/

Collections can be downloaded in many different ways. Some download modalities, such as a bash script, SPARQL, and a plain URL list, are found in the tabs at the collection. Files are provided as bzip2-compressed N-Triples files. If you need a different format or compression, you can also use the “Download-As” function of the Databus Client (GitHub); e.g., -s $collection -c gzip would download the collection and convert it to GZIP during download.

Replicating DBpedia Snapshot on your server can be done via Docker; see https://hub.docker.com/r/dbpedia/virtuoso-sparql-endpoint-quickstart

git clone https://github.com/dbpedia/virtuoso-sparql-endpoint-quickstart.git

cd virtuoso-sparql-endpoint-quickstart

COLLECTION_URI=https://databus.dbpedia.org/dbpedia/collections/dbpedia-snapshot-2022-03 VIRTUOSO_ADMIN_PASSWD=password docker-compose up

Download files from the whole DBpedia extraction. The whole extraction consists of approx. 20 Billion triples and 5000 files created from 140 languages of Wikipedia, Commons and Wikidata. They can be found in https://databus.dbpedia.org/dbpedia/(generic|mappings|text|wikidata)

You can copy-edit a collection and create your own customized collections via “Actions” -> “Copy Edit”; e.g., you can Copy Edit the snapshot collection above, remove some files that you do not need, and add files from other languages. Please see the Rhizomer use case: Best way to download specific parts of DBpedia. Of course, this only refers to the archived dumps on the Databus for users who want to bulk download and deploy into their own infrastructure. Linked Data and SPARQL allow for filtering the content using a small data pattern.

Acknowledgments

First and foremost, we would like to thank our open community of knowledge engineers for finding & fixing bugs and for supporting us by writing data tests. We would also like to acknowledge the DBpedia Association members for constantly innovating the areas of knowledge graphs and linked data and pushing the DBpedia initiative with their know-how and advice. OpenLink Software supports DBpedia by hosting SPARQL and Linked Data; University Mannheim, the German National Library of Science and Technology (TIB) and the Computer Center of University Leipzig provide persistent backups and servers for extracting data. We thank Marvin Hofer and Mykola Medynskyi for technical preparation. This work was partially supported by grants from the Federal Ministry for Economic Affairs and Energy of Germany (BMWi) for the LOD-GEOSS Project (03EI1005E), as well as for the PLASS Project (01MD19003D).

The post DBpedia Snapshot 2021-12 Release appeared first on DBpedia Association.

News since DBpedia Snapshot 2021-09

- Release notes are now maintained in the Databus Collection (2021-12)

- The Image and Abstract Extractors were improved

- Work in progress: streamlining community issue reporting and fixing on GitHub

What is the “DBpedia Snapshot” Release?

Historically, this release has been associated with many names: “DBpedia Core”, “EN DBpedia”, and — most confusingly — just “DBpedia”. In fact, it is a combination of —

- EN Wikipedia data — A small, but very useful, subset (~ 1 Billion triples or 14%) of the whole DBpedia extraction using the DBpedia Information Extraction Framework (DIEF), comprising structured information extracted from the English Wikipedia plus some enrichments from other Wikipedia language editions, notably multilingual abstracts in ar, ca, cs, de, el, eo, es, eu, fr, ga, id, it, ja, ko, nl, pl, pt, sv, uk, ru, zh.

- Links — 62 million community-contributed cross-references and owl:sameAs links to other linked data sets on the Linked Open Data (LOD) Cloud that allow to effectively find and retrieve further information from the largest, decentral, change-sensitive knowledge graph on earth that has formed around DBpedia since 2007.

- Community extensions — Community-contributed extensions such as additional ontologies and taxonomies.

Release Frequency & Schedule

Going forward, releases will be scheduled for the 15th of January, April, June and September (with +/- 5 days tolerance), and are named using the same date convention as the Wikipedia Dumps that served as the basis for the release. An example of the release timeline is shown below:

| December 6–8 | Dec 8–20 | Dec 20–Jan 15 | Jan 15–Feb 8 |
| --- | --- | --- | --- |
| Wikipedia dumps for June 1 become available on https://dumps.wikimedia.org/ | Download and extraction with DIEF | Post-processing and quality-control period | Linked Data and SPARQL endpoint deployment |

Data Freshness

Given the timeline above, the EN Wikipedia data of DBpedia Snapshot has a lag of 1-4 months. We recommend the following strategies to mitigate this:

- DBpedia Snapshot as a kernel for Linked Data: Following the Linked Data paradigm, we recommend using the Linked Data links to other knowledge graphs to retrieve high-quality and recent information. DBpedia’s network consists of the best knowledge engineers in the world, working together, using linked data principles to build a high-quality, open, decentralized knowledge graph network around DBpedia. Freshness and change-sensitivity are two of the greatest data-related challenges of our time, and can only be overcome by linking data across data sources. The “Big Data” approach of copying data into a central warehouse is inevitably challenged by issues such as co-evolution and scalability.

- DBpedia Live: Wikipedia is unmistakably the richest, most recent body of human knowledge and source of news in the world. DBpedia Live is just minutes behind edits on Wikipedia, which means that as soon as any of the 120k Wikipedia editors presses the “save” button, DBpedia Live will extract fresh data and update. DBpedia Live is currently in tech preview status, and we are working towards a highly available and reliable business API with support. DBpedia Live consists of the DBpedia Live Sync API (for syncing into any kind of on-site database), Linked Data, and a SPARQL endpoint.

- Latest-Core is a dynamically updating Databus Collection. Our automated extraction robot “MARVIN” publishes monthly dev versions of the full extraction, which are then refined and enriched to become Snapshot.

Data Quality & Richness

We would like to acknowledge the excellent work of Wikipedia editors (~46k active editors for EN Wikipedia), who are ultimately responsible for collecting information in Wikipedia’s infoboxes, which are refined by DBpedia’s extraction into our knowledge graphs. Wikipedia’s infoboxes are steadily growing each month and, according to our measurements, grow by 150% every three years. EN Wikipedia’s infoboxes even doubled in this timeframe. This richness of knowledge drives the DBpedia Snapshot knowledge graph and is further potentiated by synergies with linked data cross-references. Statistics are given below.

Data Access & Interaction Options

Linked Data

Linked Data is a principled approach to publishing RDF data on the Web that enables interlinking data between different data sources, courtesy of the built-in power of Hyperlinks as unique Entity Identifiers.

HTML pages comprising hyperlinks that conform to Linked Data principles are one way to interact with the data provided by the DBpedia Snapshot, either manually in a web browser or programmatically using REST interaction patterns via the https://dbpedia.org/resource/{entity-label} pattern. Naturally, we encourage Linked Data interactions, but we expect user agents to honor the cache-control HTTP response header during massive crawl operations. See the instructions for accessing Linked Data, which is available in 10 formats.

SPARQL Endpoint

This service enables some astonishing queries against Knowledge Graphs derived from Wikipedia content. The Query Services Endpoint that makes this possible is identified by http://dbpedia.org/sparql, and it currently handles 7.2 million queries daily on average. See powerful queries and instructions (incl. rates and limitations).

An effective usage pattern is to filter a relevant subset of entity descriptions for your use case via SPARQL and then combine it with the power of Linked Data: look up (de-reference) data via owl:sameAs property links to retrieve specific and recent data from other Knowledge Graphs across the massive Linked Open Data Cloud.

Additionally, DBpedia Snapshot dumps and additional data from the complete collection of datasets derived from Wikipedia are provided by the DBpedia Databus for use in your own SPARQL-accessible Knowledge Graphs.

DBpedia Ontology

This Snapshot Release was built with DBpedia Ontology (DBO) version: https://databus.dbpedia.org/ontologies/dbpedia.org/ontology--DEV/2021.11.08-124002. We thank all DBpedians for the contribution to the ontology and the mappings. See documentation and visualizations, class tree and properties, wiki.

DBpedia Snapshot Statistics

Overview. Overall the current Snapshot Release contains more than 850 million facts (triples).

The DBpedia ontology is the heart of DBpedia. Our community is continuously contributing to the DBpedia ontology schema and the DBpedia infobox-to-ontology mappings by actively using the DBpedia Mappings Wiki.

The current Snapshot Release utilizes a total of 55 thousand properties, of which 1377 are defined by the DBpedia ontology.

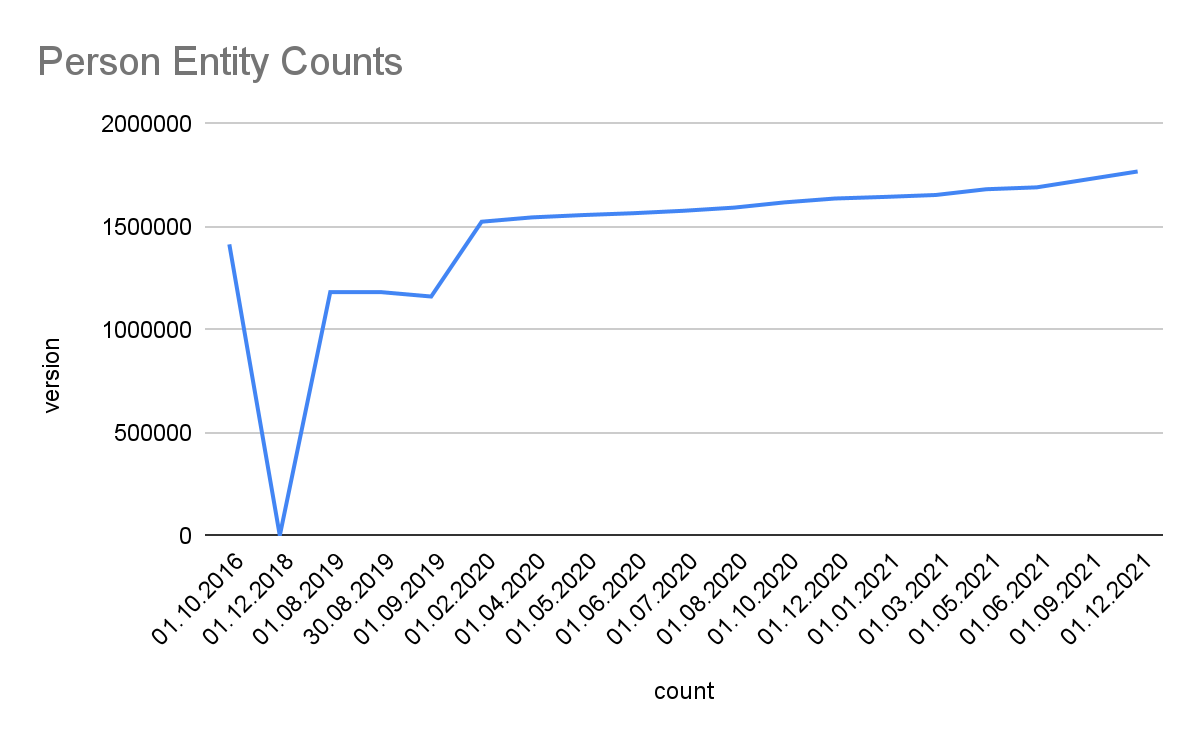

Classes. Knowledge in Wikipedia is constantly growing at a rapid pace. We use the DBpedia Ontology Classes to measure the growth: Total number in this release (in brackets we give: a) growth to the previous release, which can be negative temporarily and b) growth compared to Snapshot 2016-10):

- Persons: 1768613 (1.02%, 1.11%)

- Places: 745010 (1.01%, 1812.68%), including but not limited to 588283 (1.01%, 5497.97%) populated places

- Works: 607852 (1.01%, 617.11%), including but not limited to

  - 157266 (1.00%, 1.38%) music albums

  - 143193 (1.01%, 15.80%) films

  - 24658 (1.01%, 12.44%) video games

- Organizations: 343109 (1.01%, 108.54%), including but not limited to

  - 86950 (1.01%, 2.23%) companies

  - 64539 (1.00%, 64539.00%) educational institutions

- Species: 1918094 (11.95%, 319682.33%)

- Plants: 9369 (0.89%, 2.07%)

- Diseases: 10553 (1.00%, 8.51%)

Detailed Growth of Classes: The image below shows the detailed growth for one class. Click on the links for other classes: Place, PopulatedPlace, Work, Album, Film, VideoGame, Organisation, Company, EducationalInstitution, Species, Plant, Disease. For further classes, adapt the query by replacing the <http://dbpedia.org/ontology/CLASS> URI. Note that 2018 was a development phase with some failed extractions. The stats were generated with the Databus VOID Mod.
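The query behind the linked statistics runs against the Databus VOID Mod and is not reproduced in this post. As a loosely related sketch only, the Python snippet below shows the same "replace the class URI" idea with a plain instance count that could be sent to a SPARQL endpoint; `count_query` is a hypothetical helper and its result need not match the published numbers.

```python
# Namespace of the DBpedia Ontology classes referenced in the text.
ONTOLOGY = "http://dbpedia.org/ontology/"


def count_query(class_name: str) -> str:
    """Return a SPARQL query that counts distinct instances of one DBO class."""
    return (
        "SELECT (COUNT(DISTINCT ?s) AS ?instances) WHERE {\n"
        f"  ?s a <{ONTOLOGY}{class_name}> .\n"
        "}"
    )


# Swapping the class name is the only change needed per class.
for name in ("Person", "Place", "Disease"):
    print(count_query(name))
    print()
```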

Links. Linked Data cross-references between decentral datasets are the foundation and access point to the Linked Data Web. The latest Snapshot Release provides over 129.9 million links from 7.54 million entities to 179 external sources.

Top 11

33,522,731 http://www.wikidata.org

6,924,866 https://global.dbpedia.org

4,308,772 http://yago-knowledge.org

3,742,122 http://de.dbpedia.org

3,617,112 http://fr.dbpedia.org

3,067,339 http://viaf.org

2,860,142 http://it.dbpedia.org

2,841,468 http://es.dbpedia.org

2,609,236 http://fa.dbpedia.org

2,549,967 http://sr.dbpedia.org

2,496,247 http://ru.dbpedia.org

Top 10 without DBpedia namespaces

33,522,731 http://www.wikidata.org

4,308,772 http://yago-knowledge.org

3,067,339 http://viaf.org

1,831,098 http://d-nb.info

641,602 http://sws.geonames.org

596,134 http://umbel.org

524,267 http://data.bibliotheken.nl

430,839 http://www.w3.org

370,844 http://musicbrainz.org

106,498 http://linkedgeodata.org

DBpedia Extraction Dumps on the Databus

All extracted files are reachable via the DBpedia account on the Databus. The Databus has two main structures:

- In groups, artifacts, version, similar to software in Maven: https://databus.dbpedia.org/$user/$group/$artifact/$version/$filename

- In Databus collections that are SPARQL queries over https://databus.dbpedia.org/yasui filtering and selecting files from these artifacts.
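The Maven-like layout can be sketched in a few lines of Python. The class below composes and parses file URLs following the `$user/$group/$artifact/$version/$filename` pattern; the class name `DatabusFile` and the concrete artifact, version, and file name in the usage lines are placeholders chosen for illustration, not verified entries on the Databus.

```python
from dataclasses import dataclass

DATABUS = "https://databus.dbpedia.org"


@dataclass(frozen=True)
class DatabusFile:
    """One file addressed by the user/group/artifact/version/filename layout."""

    user: str
    group: str
    artifact: str
    version: str
    filename: str

    def url(self) -> str:
        """Join the five path segments under the Databus base URL."""
        parts = [self.user, self.group, self.artifact, self.version, self.filename]
        return DATABUS + "/" + "/".join(parts)

    @classmethod
    def from_url(cls, url: str) -> "DatabusFile":
        """Split a file URL back into its five segments."""
        prefix = DATABUS + "/"
        if not url.startswith(prefix):
            raise ValueError(f"not a Databus URL: {url}")
        parts = url[len(prefix):].split("/")
        if len(parts) != 5:
            raise ValueError(f"expected 5 path segments, got {len(parts)}")
        return cls(*parts)


# Placeholder values for illustration only.
example = DatabusFile(
    "dbpedia", "mappings", "instance-types", "2021.12.01",
    "instance-types_lang=en.ttl.bz2",
)
print(example.url())
print(DatabusFile.from_url(example.url()).artifact)
```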

Snapshot Download. For downloading DBpedia Snapshot, we prepared this collection, which also includes detailed release notes: https://databus.dbpedia.org/dbpedia/collections/dbpedia-snapshot-2021-12

The collection is roughly equivalent to http://downloads.dbpedia.org/2016-10/core/.

Collections can be downloaded in many different ways; some download modalities, such as bash script, SPARQL, and plain URL list, are found in the tabs at the collection. Files are provided as bzip2-compressed N-Triples files. In case you need a different format or compression, you can also use the “Download-As” function of the Databus Client (GitHub), e.g. -s $collection -c gzip would download the collection and convert it to GZIP during download.
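For readers who want to inspect a downloaded file without decompressing it first, here is a small Python sketch that streams a bzip2-compressed N-Triples file and tallies predicates. The three sample triples are made up for the demonstration, the whitespace split is a deliberately naive stand-in for a real N-Triples parser, and `predicate_counts` is a hypothetical helper name.

```python
import bz2
import io
from collections import Counter


def predicate_counts(lines) -> Counter:
    """Count predicates in an iterable of N-Triples lines (naive whitespace split)."""
    counts = Counter()
    for raw in lines:
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        # Subject and predicate contain no spaces, so two splits isolate the predicate.
        parts = line.split(None, 2)
        if len(parts) == 3:
            counts[parts[1]] += 1
    return counts


# Made-up sample standing in for a downloaded *.ttl.bz2 file.
sample = (
    '<http://dbpedia.org/resource/Leipzig> <http://www.w3.org/2000/01/rdf-schema#label> "Leipzig"@en .\n'
    "<http://dbpedia.org/resource/Leipzig> <http://www.w3.org/1999/02/22-rdf-syntax-ns#type> <http://dbpedia.org/ontology/City> .\n"
    '<http://dbpedia.org/resource/Berlin> <http://www.w3.org/2000/01/rdf-schema#label> "Berlin"@en .\n'
)
compressed = bz2.compress(sample.encode("utf-8"))

# bz2.open also accepts a file path, e.g. bz2.open("labels.ttl.bz2", "rt", encoding="utf-8").
with bz2.open(io.BytesIO(compressed), mode="rt", encoding="utf-8") as fh:
    counts = predicate_counts(fh)

for predicate, n in counts.most_common():
    print(n, predicate)
```

Streaming line by line keeps memory use flat, which matters for dump files of this size.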

Replicating DBpedia Snapshot on your server can be done via Docker, see https://hub.docker.com/r/dbpedia/virtuoso-sparql-endpoint-quickstart.

git clone https://github.com/dbpedia/virtuoso-sparql-endpoint-quickstart.git

cd virtuoso-sparql-endpoint-quickstart

COLLECTION_URI=https://databus.dbpedia.org/dbpedia/collections/dbpedia-snapshot-2021-12 VIRTUOSO_ADMIN_PASSWD=password docker-compose up
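Once the containers are running, a quick smoke test is to send a trivial query to the local endpoint. The Python sketch below only builds that URL; it assumes the quickstart publishes Virtuoso's HTTP service on localhost port 8890 under /sparql (Virtuoso's usual default, which should be checked against the repository's configuration), and `local_sparql_url` is a hypothetical helper.

```python
from urllib.parse import urlencode

# A trivial query that succeeds as soon as any triple has been loaded.
ASK_ANYTHING = "ASK { ?s ?p ?o }"


def local_sparql_url(query: str, host: str = "localhost", port: int = 8890) -> str:
    """Build a GET URL for a local endpoint (host, port and path are assumptions)."""
    return f"http://{host}:{port}/sparql?" + urlencode({"query": query})


print(local_sparql_url(ASK_ANYTHING))
# With the containers up: urllib.request.urlopen(local_sparql_url(ASK_ANYTHING)).read()
```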

Download files from the whole DBpedia extraction. The whole extraction consists of approx. 20 Billion triples and 5000 files created from 140 languages of Wikipedia, Commons and Wikidata. They can be found in https://databus.dbpedia.org/dbpedia/(generic|mappings|text|wikidata)

You can copy-edit a collection and create your own customized collections via “Actions” -> “Copy Edit”, e.g. you can Copy Edit the snapshot collection above, remove some files that you do not need, and add files from other languages. Please see the Rhizomer use case: Best way to download specific parts of DBpedia. Of course, this only refers to the archived dumps on the Databus for users who want to bulk download and deploy into their own infrastructure. Linked Data and SPARQL allow for filtering the content using a small data pattern.

Acknowledgments

First and foremost, we would like to thank our open community of knowledge engineers for finding & fixing bugs and for supporting us by writing data tests. We would also like to acknowledge the DBpedia Association members for constantly innovating the areas of knowledge graphs and linked data and pushing the DBpedia initiative with their know-how and advice.

OpenLink Software supports DBpedia by hosting SPARQL and Linked Data; University Mannheim, the German National Library of Science and Technology (TIB) and the Computer Center of University Leipzig provide persistent backups and servers for extracting data. We thank Marvin Hofer and Mykola Medynskyi for technical preparation. This work was partially supported by grants from the Federal Ministry for Economic Affairs and Energy of Germany (BMWi) for the LOD-GEOSS Project (03EI1005E), as well as for the PLASS Project (01MD19003D).

The post DBpedia Snapshot 2021-12 Release appeared first on DBpedia Association.

The post DBpedia Snapshot 2021-09 Release appeared first on DBpedia Association.

News since DBpedia Snapshot 2021-06

- Release notes are now maintained in the Databus Collection (2021-09)

- Image and Abstract Extractor was improved

- Work in progress: Smoothing the community issue reporting and fixing on GitHub

What is the “DBpedia Snapshot” Release?

Historically, this release has been associated with many names: “DBpedia Core”, “EN DBpedia”, and — most confusingly — just “DBpedia”. In fact, it is a combination of —

- EN Wikipedia data: A small, but very useful, subset (~ 1 Billion triples or 14%) of the whole DBpedia extraction using the DBpedia Information Extraction Framework (DIEF), comprising structured information extracted from the English Wikipedia plus some enrichments from other Wikipedia language editions, notably multilingual abstracts in ar, ca, cs, de, el, eo, es, eu, fr, ga, id, it, ja, ko, nl, pl, pt, sv, uk, ru, zh.

- Links: 62 million community-contributed cross-references and owl:sameAs links to other linked data sets on the Linked Open Data (LOD) Cloud that make it possible to effectively find and retrieve further information from the largest, decentral, change-sensitive knowledge graph on earth that has formed around DBpedia since 2007.

- Community extensions: Community-contributed extensions such as additional ontologies and taxonomies.

Release Frequency & Schedule

Going forward, releases will be scheduled for the 15th of February, May, July, and October (with +/- 5 days tolerance), and are named using the same date convention as the Wikipedia Dumps that served as the basis for the release. An example of the release timeline is shown below:

| September 6–8 | Sep 8–20 | Sep 20–Oct 10 | Oct 10–20 |

| Wikipedia dumps for June 1 become available on https://dumps.wikimedia.org/ | Download and extraction with DIEF | Post-processing and quality-control period | Linked Data and SPARQL endpoint deployment |

Data Freshness

Given the timeline above, the EN Wikipedia data of DBpedia Snapshot has a lag of 1-4 months. We recommend the following strategies to mitigate this:

- DBpedia Snapshot as a kernel for Linked Data: Following the Linked Data paradigm, we recommend using the Linked Data links to other knowledge graphs to retrieve high-quality and recent information. DBpedia’s network consists of the best knowledge engineers in the world, working together, using linked data principles to build a high-quality, open, decentralized knowledge graph network around DBpedia. Freshness and change-sensitivity are two of the greatest data-related challenges of our time, and can only be overcome by linking data across data sources. The “Big Data” approach of copying data into a central warehouse is inevitably challenged by issues such as co-evolution and scalability.

- DBpedia Live: Wikipedia is unmistakably the richest, most recent body of human knowledge and source of news in the world. DBpedia Live is just minutes behind edits on Wikipedia, which means that as soon as any of the 120k Wikipedia editors presses the “save” button, DBpedia Live will extract fresh data and update. DBpedia Live is currently in tech preview status and we are working towards a highly available and reliable business API with support. DBpedia Live consists of the DBpedia Live Sync API (for syncing into any kind of on-site databases), Linked Data and SPARQL endpoint.

- Latest-Core is a dynamically updating Databus Collection. Our automated extraction robot “MARVIN” publishes monthly dev versions of the full extraction, which are then refined and enriched to become Snapshot.

Data Quality & Richness

We would like to acknowledge the excellent work of Wikipedia editors (~46k active editors for EN Wikipedia), who are ultimately responsible for collecting information in Wikipedia’s infoboxes, which are refined by DBpedia’s extraction into our knowledge graphs. Wikipedia’s infoboxes are steadily growing each month and according to our measurements grow by 150% every three years. EN Wikipedia’s infoboxes even doubled in this timeframe. This richness of knowledge drives the DBpedia Snapshot knowledge graph and is further potentiated by synergies with linked data cross-references. Statistics are given below.

Data Access & Interaction Options

Linked Data

Linked Data is a principled approach to publishing RDF data on the Web that enables interlinking data between different data sources, courtesy of the built-in power of Hyperlinks as unique Entity Identifiers.

HTML pages comprising Hyperlinks that conform to Linked Data Principles are one of the methods of interacting with data provided by the DBpedia Snapshot, be it manually via the web browser or programmatically using REST interaction patterns via the https://dbpedia.org/resource/{entity-label} pattern. Naturally, we encourage Linked Data interactions, while also expecting user-agents to honor the cache-control HTTP response header for massive crawl operations. See the instructions for accessing Linked Data, which is available in 10 formats.

SPARQL Endpoint

This service enables some astonishing queries against Knowledge Graphs derived from Wikipedia content. The Query Services Endpoint that makes this possible is identified by http://dbpedia.org/sparql, and it currently handles 7.2 million queries daily on average. See powerful queries and instructions (incl. rates and limitations).

An effective Usage Pattern is to filter a relevant subset of entity descriptions for your use case via SPARQL and then combine it with the power of Linked Data by looking up (or de-referencing) data via owl:sameAs property links, en route to retrieving specific and recent data from other Knowledge Graphs across the massive Linked Open Data Cloud.
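The second half of this pattern, following the links, can be sketched as below. The Python snippet takes a response in the standard SPARQL JSON results format and groups owl:sameAs targets by host, so that each external Knowledge Graph can be de-referenced in turn. The variable name `other`, the helper `links_by_host`, and the sample bindings (including the placeholder identifiers) are assumptions made for the example.

```python
import json
from collections import defaultdict
from urllib.parse import urlparse


def links_by_host(results: dict, var: str = "other") -> dict:
    """Group the URI values bound to `var` by the host they point to."""
    grouped = defaultdict(list)
    for binding in results["results"]["bindings"]:
        cell = binding.get(var)
        if cell and cell["type"] == "uri":
            grouped[urlparse(cell["value"]).netloc].append(cell["value"])
    return dict(grouped)


# Hand-written sample in SPARQL JSON results format; identifiers are placeholders.
sample = json.loads("""
{
  "head": {"vars": ["entity", "other"]},
  "results": {"bindings": [
    {"entity": {"type": "uri", "value": "http://dbpedia.org/resource/Example"},
     "other":  {"type": "uri", "value": "http://www.wikidata.org/entity/Q_EXAMPLE"}},
    {"entity": {"type": "uri", "value": "http://dbpedia.org/resource/Example"},
     "other":  {"type": "uri", "value": "http://de.dbpedia.org/resource/Example"}},
    {"entity": {"type": "uri", "value": "http://dbpedia.org/resource/Other"},
     "other":  {"type": "uri", "value": "http://www.wikidata.org/entity/Q_OTHER"}}
  ]}
}
""")

grouped = links_by_host(sample)
for host in sorted(grouped):
    print(host, len(grouped[host]))
```

Grouping by host makes it easy to apply per-source politeness rules, such as separate request rates, before de-referencing.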

Additionally, DBpedia Snapshot dumps and additional data from the complete collection of datasets derived from Wikipedia are provided by the DBpedia Databus for use in your own SPARQL-accessible Knowledge Graphs.

DBpedia Ontology

This Snapshot Release was built with DBpedia Ontology (DBO) version: https://databus.dbpedia.org/ontologies/dbpedia.org/ontology--DEV/2021.07.09-070001. We thank all DBpedians for the contribution to the ontology and the mappings. See documentation and visualizations, class tree and properties, wiki.

DBpedia Snapshot Statistics

Overview. Overall the current Snapshot Release contains more than 850 million facts (triples).

The DBpedia ontology is the heart of DBpedia. Our community is continuously contributing to the DBpedia ontology schema and the DBpedia infobox-to-ontology mappings by actively using the DBpedia Mappings Wiki.

The current Snapshot Release utilizes a total of 55 thousand properties, of which 1377 are defined by the DBpedia ontology.

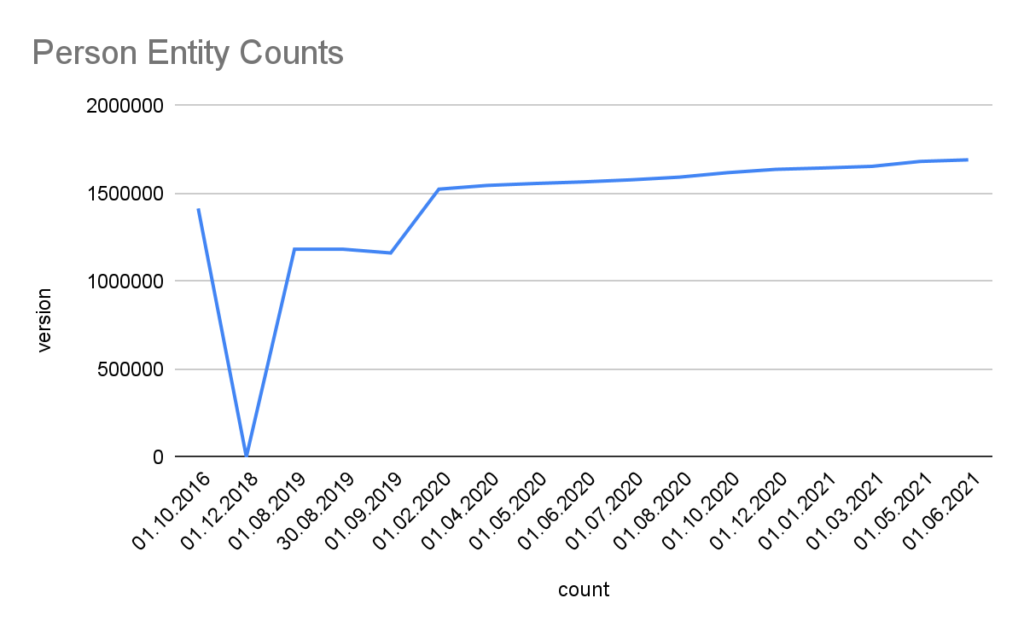

Classes. Knowledge in Wikipedia is constantly growing at a rapid pace. We use the DBpedia Ontology Classes to measure the growth: Total number in this release (in brackets we give: a) growth to the previous release, which can be negative temporarily and b) growth compared to Snapshot 2016-10):

- Persons: 1,730,033 (2.28%, 8.85%)

- Places: 737,512 (-25.64%, -11.42%), including but not limited to 582,191 (-0.14%, 13.35%) populated places

- Works: 603,110 (1.34%, 21.58%), including but not limited to

  - 157,137 (1.94%, 38.02%) music albums

  - 142,135 (0.75%, 1466.74%) films

  - 24,452 (0.85%, 1133.70%) video games

- Organizations: 339,927 (-0.13%, -42.35%), including but not limited to

  - 85,726 (1.20%, 59.79%) companies

  - 64,474 (1.01%, 17.68%) educational institutions

- Species: 160,535 (-3.71%, -46.47%)

- Plants: 10,509 (-9.41%, 8.10%)

- Diseases: 10,512 (-9.39%, 747.74%)

Detailed Growth of Classes: The image below shows the detailed growth for one class. Click on the links for other classes: Place, PopulatedPlace, Work, Album, Film, VideoGame, Organisation, Company, EducationalInstitution, Species, Plant, Disease. For further classes, adapt the query by replacing the <http://dbpedia.org/ontology/CLASS> URI. Note that 2018 was a development phase with some failed extractions. The stats were generated with the Databus VOID Mod.

Links. Linked Data cross-references between decentral datasets are the foundation and access point to the Linked Data Web. The latest Snapshot Release provides over 127.8 million links from 7.47 million entities to 179 external sources.

Top 11

33,403,279 www.wikidata.org

6,847,067 global.dbpedia.org

4,308,772 yago-knowledge.org

3,712,468 de.dbpedia.org

3,589,032 fr.dbpedia.org

2,917,799 viaf.org

2,841,527 it.dbpedia.org

2,816,382 es.dbpedia.org

2,567,507 fa.dbpedia.org

2,542,619 sr.dbpedia.org

Top 10 without DBpedia namespaces

33,403,279 www.wikidata.org

4,308,772 yago-knowledge.org

2,917,799 viaf.org

1,614,381 d-nb.info

596,134 umbel.org

581,558 sws.geonames.org

521,985 data.bibliotheken.nl

430,839 www.w3.org

369,309 musicbrainz.org

104,433 linkedgeodata.org

DBpedia Extraction Dumps on the Databus

All extracted files are reachable via the DBpedia account on the Databus. The Databus has two main structures:

- In groups, artifacts, version, similar to software in Maven: https://databus.dbpedia.org/$user/$group/$artifact/$version/$filename

- In Databus collections that are SPARQL queries over https://databus.dbpedia.org/yasui filtering and selecting files from these artifacts.

Snapshot Download. For downloading DBpedia Snapshot, we prepared this collection, which also includes detailed release notes: https://databus.dbpedia.org/dbpedia/collections/dbpedia-snapshot-2021-06

The collection is roughly equivalent to http://downloads.dbpedia.org/2016-10/core/

Collections can be downloaded in many different ways; some download modalities, such as bash script, SPARQL, and plain URL list, are found in the tabs at the collection. Files are provided as bzip2-compressed N-Triples files. In case you need a different format or compression, you can also use the “Download-As” function of the Databus Client (GitHub), e.g. -s $collection -c gzip would download the collection and convert it to GZIP during download.

Replicating DBpedia Snapshot on your server can be done via Docker, see https://hub.docker.com/r/dbpedia/virtuoso-sparql-endpoint-quickstart

git clone https://github.com/dbpedia/virtuoso-sparql-endpoint-quickstart.git

cd virtuoso-sparql-endpoint-quickstart

COLLECTION_URI=https://databus.dbpedia.org/dbpedia/collections/dbpedia-snapshot-2021-09 VIRTUOSO_ADMIN_PASSWD=password docker-compose up

Download files from the whole DBpedia extraction. The whole extraction consists of approx. 20 Billion triples and 5000 files created from 140 languages of Wikipedia, Commons and Wikidata. They can be found in https://databus.dbpedia.org/dbpedia/(generic|mappings|text|wikidata)

You can copy-edit a collection and create your own customized collections via “Actions” -> “Copy Edit”, e.g. you can Copy Edit the snapshot collection above, remove some files that you do not need, and add files from other languages. Please see the Rhizomer use case: Best way to download specific parts of DBpedia. Of course, this only refers to the archived dumps on the Databus for users who want to bulk download and deploy into their own infrastructure. Linked Data and SPARQL allow for filtering the content using a small data pattern.

Acknowledgments

First and foremost, we would like to thank our open community of knowledge engineers for finding & fixing bugs and for supporting us by writing data tests. We would also like to acknowledge the DBpedia Association members for constantly innovating the areas of knowledge graphs and linked data and pushing the DBpedia initiative with their know-how and advice. OpenLink Software supports DBpedia by hosting SPARQL and Linked Data; University Mannheim, the German National Library of Science and Technology (TIB) and the Computer Center of University Leipzig provide persistent backups and servers for extracting data. We thank Marvin Hofer and Mykola Medynskyi for technical preparation. This work was partially supported by grants from the Federal Ministry for Economic Affairs and Energy of Germany (BMWi) for the LOD-GEOSS Project (03EI1005E), as well as for the PLASS Project (01MD19003D).

The post Announcement: DBpedia Snapshot 2021-06 Release appeared first on DBpedia Association.

DBpedia Snapshot 2021-06 Release

We are pleased to announce the immediate availability of a new edition of the free and publicly accessible SPARQL Query Service Endpoint and Linked Data Pages, for interacting with the new Snapshot Dataset.

What is the “DBpedia Snapshot” Release?

Historically, this release has been associated with many names: “DBpedia Core”, “EN DBpedia”, and — most confusingly — just “DBpedia”. In fact, it is a combination of —

- EN Wikipedia data: A small, but very useful, subset (~ 1 Billion triples or 14%) of the whole DBpedia extraction using the DBpedia Information Extraction Framework (DIEF), comprising structured information extracted from the English Wikipedia plus some enrichments from other Wikipedia language editions, notably multilingual abstracts in ar, ca, cs, de, el, eo, es, eu, fr, ga, id, it, ja, ko, nl, pl, pt, sv, uk, ru, zh.

- Links: 62 million community-contributed cross-references and owl:sameAs links to other linked data sets on the Linked Open Data (LOD) Cloud that make it possible to effectively find and retrieve further information from the largest, decentral, change-sensitive knowledge graph on earth that has formed around DBpedia since 2007.

- Community extensions: Community-contributed extensions such as additional ontologies and taxonomies.

Release Frequency & Schedule

Going forward, releases will be scheduled for the 15th of February, May, July, and October (with +/- 5 days tolerance), and are named using the same date convention as the Wikipedia Dumps that served as the basis for the release. An example of the release timeline is shown below:

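| June | June 8–20 | June 20–July 10 | July 10–20 |

| Wikipedia dumps for June 1 become available on https://dumps.wikimedia.org/ | Download and extraction with DIEF | Post-processing and quality-control period | Linked Data and SPARQL endpoint deployment |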

Data Freshness

Given the timeline above, the EN Wikipedia data of DBpedia Snapshot has a lag of 1-4 months. We recommend the following strategies to mitigate this:

- DBpedia Snapshot as a kernel for Linked Data: Following the Linked Data paradigm, we recommend using the Linked Data links to other knowledge graphs to retrieve high-quality and recent information. DBpedia’s network consists of the best knowledge engineers in the world, working together, using linked data principles to build a high-quality, open, decentralized knowledge graph network around DBpedia. Freshness and change-sensitivity are two of the greatest data-related challenges of our time, and can only be overcome by linking data across data sources. The “Big Data” approach of copying data into a central warehouse is inevitably challenged by issues such as co-evolution and scalability.

- DBpedia Live: Wikipedia is unmistakably the richest, most recent body of human knowledge and source of news in the world. DBpedia Live is just minutes behind edits on Wikipedia, which means that as soon as any of the 120k Wikipedia editors presses the “save” button, DBpedia Live will extract fresh data and update. DBpedia Live is currently in tech preview status and we are working towards a highly available and reliable business API with support. DBpedia Live consists of the DBpedia Live Sync API (for syncing into any kind of on-site databases), Linked Data and SPARQL endpoint.

- Latest-Core is a dynamically updating Databus Collection. Our automated extraction robot “MARVIN” publishes monthly dev versions of the full extraction, which are then refined and enriched to become Snapshot.

Data Quality & Richness

We would like to acknowledge the excellent work of Wikipedia editors (~46k active editors for EN Wikipedia), who are ultimately responsible for collecting information in Wikipedia’s infoboxes, which are refined by DBpedia’s extraction into our knowledge graphs. Wikipedia’s infoboxes are steadily growing each month and according to our measurements grow by 150% every three years. EN Wikipedia’s infoboxes even doubled in this timeframe. This richness of knowledge drives the DBpedia Snapshot knowledge graph and is further potentiated by synergies with linked data cross-references. Statistics are given below.

Data Access & Interaction Options

Linked Data

Linked Data is a principled approach to publishing RDF data on the Web that enables interlinking data between different data sources, courtesy of the built-in power of Hyperlinks as unique Entity Identifiers.

HTML pages comprising Hyperlinks that conform to Linked Data Principles are one of the methods of interacting with data provided by the DBpedia Snapshot, be it manually via the web browser or programmatically using REST interaction patterns via the https://dbpedia.org/resource/{entity-label} pattern. Naturally, we encourage Linked Data interactions, while also expecting user-agents to honor the cache-control HTTP response header for massive crawl operations. See the instructions for accessing Linked Data, which is available in 10 formats.

SPARQL Endpoint

This service enables some astonishing queries against Knowledge Graphs derived from Wikipedia content. The Query Services Endpoint that makes this possible is identified by http://dbpedia.org/sparql, and it currently handles 7.2 million queries daily on average. See powerful queries and instructions (incl. rates and limitations).

An effective Usage Pattern is to filter a relevant subset of entity descriptions for your use case via SPARQL and then combine it with the power of Linked Data by looking up (or de-referencing) data via owl:sameAs property links, en route to retrieving specific and recent data from other Knowledge Graphs across the massive Linked Open Data Cloud.

Additionally, DBpedia Snapshot dumps and additional data from the complete collection of datasets derived from Wikipedia are provided by the DBpedia Databus for use in your own SPARQL-accessible Knowledge Graphs.

DBpedia Ontology

This Snapshot Release was built with DBpedia Ontology (DBO) version: https://databus.dbpedia.org/ontologies/dbpedia.org/ontology--DEV/2021.07.09-070001. We thank all DBpedians for the contribution to the ontology and the mappings. See documentation and visualisations, class tree and properties, wiki.

DBpedia Snapshot Statistics

Overview. Overall the current Snapshot Release contains more than 850 million facts (triples).

The DBpedia ontology is the heart of DBpedia. Our community is continuously contributing to the DBpedia ontology schema and the DBpedia infobox-to-ontology mappings by actively using the DBpedia Mappings Wiki.

The current Snapshot Release utilizes a total of 55 thousand properties, of which 1372 are defined by the DBpedia ontology.

Classes. Knowledge in Wikipedia is constantly growing at a rapid pace. We use the DBpedia Ontology Classes to measure the growth: Total number in this release (in brackets we give: a) growth to previous release, which can be negative temporarily and b) growth compared to Snapshot 2016-10):

- Persons: 1682299 (1.69%, 5.84%)

- Places: 992381 (0.44%, 2413%), including but not limited to 584938 (-0.0%, 5465%) populated places

- Works: 593689 (0.54%, 6017%), including but not limited to

  - 153984 (-0.7%, 35.2%) music albums

  - 140766 (0.79%, 1453%) films

  - 24198 (1.17%, 1120%) video games

- Organizations: 341609 (0.69%, 1070%), including but not limited to

  - 84464 (0.61%, 116.%) companies

  - 63809 (0.17%, 6380%) educational institutions

- Species: 169874 (-5.9%, 2831%)

- Plants: 11952 (-15.%, 164.%)

- Diseases: 8637 (0.16%, 596.%)

Detailed Growth of Classes: The image below shows the detailed growth for one class. Click on the links for other classes: Place, PopulatedPlace, Work, Album, Film, VideoGame, Organisation, Company, EducationalInstitution, Species, Plant, Disease. For further classes, adapt the query by replacing the <http://dbpedia.org/ontology/CLASS> URI. Note that 2018 was a development phase with some failed extractions. The stats were generated with the Databus VOID Mod.

Links. Linked Data cross-references between decentral datasets are the foundation and access point to the Linked Data Web. The latest Snapshot Release provides over 61.7 million links from 6.67 million entities to 178 external sources.

Top 11

6,672,052 global.dbpedia.org

5,380,836 www.wikidata.org

4,308,772 yago-knowledge.org

2,561,963 viaf.org

1,989,632 fr.dbpedia.org

1,851,182 de.dbpedia.org

1,563,230 it.dbpedia.org

1,495,866 es.dbpedia.org

1,283,672 pl.dbpedia.org

1,274,002 ru.dbpedia.org

1,203,247 d-nb.info

Top 10 without DBpedia namespaces

5,380,836 www.wikidata.org

4,308,772 yago-knowledge.org

2,561,963 viaf.org

1,203,247 d-nb.info

596,134 umbel.org

559,821 sws.geonames.org

431,830 data.bibliotheken.nl

430,839 www.w3.org (wn20)

301,483 musicbrainz.org

104,433 linkedgeodata.org

DBpedia Extraction Dumps on the Databus

All extracted files are reachable via the DBpedia account on the Databus. The Databus has two main structures:

- In groups, artifacts, version, similar to software in Maven: https://databus.dbpedia.org/$user/$group/$artifact/$version/$filename

- In Databus collections that are SPARQL queries over https://databus.dbpedia.org/yasui filtering and selecting files from these artifacts.

Snapshot Download. For downloading DBpedia Snapshot, we prepared this collection, which also includes detailed release notes:

https://databus.dbpedia.org/dbpedia/collections/dbpedia-snapshot-2021-06

The collection is roughly equivalent to http://downloads.dbpedia.org/2016-10/core/

Collections can be downloaded in many different ways; some download modalities, such as bash script, SPARQL, and plain URL list, are found in the tabs at the collection. Files are provided as bzip2-compressed N-Triples files. In case you need a different format or compression, you can also use the “Download-As” function of the Databus Client (GitHub), e.g. -s $collection -c gzip would download the collection and convert it to GZIP during download.

Replicating DBpedia Snapshot on your server can be done via Docker, see https://hub.docker.com/r/dbpedia/virtuoso-sparql-endpoint-quickstart

git clone https://github.com/dbpedia/virtuoso-sparql-endpoint-quickstart.git

cd virtuoso-sparql-endpoint-quickstart

COLLECTION_URI=https://databus.dbpedia.org/dbpedia/collections/dbpedia-snapshot-2021-06 VIRTUOSO_ADMIN_PASSWD=password docker-compose up

Download files from the whole DBpedia extraction. The whole extraction consists of approx. 20 Billion triples and 5000 files created from 140 languages of Wikipedia, Commons and Wikidata. They can be found in https://databus.dbpedia.org/dbpedia/(generic|mappings|text|wikidata)

You can copy-edit a collection and create your own customized collections via “Actions” -> “Copy Edit”, e.g. you can Copy Edit the snapshot collection above, remove some files that you do not need, and add files from other languages. Please see the Rhizomer use case: Best way to download specific parts of DBpedia. Of course, this only refers to the archived dumps on the Databus for users who want to bulk download and deploy into their own infrastructure. Linked Data and SPARQL allow for filtering the content using a small data pattern.
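When working from a collection's plain URL list rather than the web interface, the same "keep only what you need" idea can be approximated locally. The Python sketch below filters a list of file URLs by language tag; it assumes file names carry a `_lang=xx` marker, and both the helper `filter_by_language` and the sample URLs are hypothetical.

```python
import re

# Assumed naming convention: the file name contains "_lang=" followed by a language code.
LANG_TAG = re.compile(r"_lang=([a-z-]+)")


def filter_by_language(urls, languages):
    """Keep only the URLs whose file name carries one of the wanted language tags."""
    wanted = set(languages)
    kept = []
    for url in urls:
        filename = url.rsplit("/", 1)[-1]
        match = LANG_TAG.search(filename)
        if match and match.group(1) in wanted:
            kept.append(url)
    return kept


# Hypothetical URL list, shaped like a collection's plain URL export.
urls = [
    "https://databus.dbpedia.org/dbpedia/generic/labels/2021.06.01/labels_lang=en.ttl.bz2",
    "https://databus.dbpedia.org/dbpedia/generic/labels/2021.06.01/labels_lang=de.ttl.bz2",
    "https://databus.dbpedia.org/dbpedia/generic/labels/2021.06.01/labels_lang=fr.ttl.bz2",
]

for url in filter_by_language(urls, ["en", "de"]):
    print(url)
```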

Acknowledgements

First and foremost, we would like to thank our open community of knowledge engineers for finding & fixing bugs and for supporting us by writing data tests. We would also like to acknowledge the DBpedia Association members for constantly innovating the areas of knowledge graphs and linked data and pushing the DBpedia initiative with their know-how and advice. OpenLink Software supports DBpedia by hosting SPARQL and Linked Data; University Mannheim, the German National Library of Science and Technology (TIB) and the Computer Center of University Leipzig provide persistent backups and servers for extracting data. We thank Marvin Hofer and Mykola Medynskyi for the technical preparation, testing and execution of this release. This work was partially supported by grants from the Federal Ministry for Economic Affairs and Energy of Germany (BMWi) for the LOD-GEOSS Project (03EI1005E), as well as for the PLASS Project (01MD19003D).

The post DBpedia Global: Data Beyond Wikipedia appeared first on DBpedia Association.

Since 2007, we’ve been extracting, mapping and linking content from Wikipedia into what is generally known as the DBpedia Snapshot that provided the kernel for what is known today as the LOD Cloud Knowledge Graph.

Today, we are launching DBpedia Global, a more powerful kernel for LOD Cloud Knowledge Graph that ultimately strengthens the utility of Linked Data principles by adding more decentralization i.e., broadening the scope of Linked Data associated with DBpedia. Think of this as “DBpedia beyond Wikipedia” courtesy of additional reference data from various sources.

DBpedia Global (DBpedia beyond Wikipedia) Benefits?

It provides enhanced discovery support by way of backlinks from DBpedia and triangulation links (transitive closure) between nodes in this new LOD Cloud Knowledge Graph kernel.

Ontologies and schemas are mapped to the DBpedia Ontology, as exemplified by the treatment of Wikipedia’s Infoboxes in mappings.dbpedia.org, all with the intent of enhancing findability and subsequent use of a broader Knowledge Graph, just as is possible today for the DBpedia Snapshot.

The general objective of this endeavor is to improve link density and quality, to provide access to archive dumps and structured data in a variety of formats, to support cross-vocabulary integration, and to enhance the findability of relevant and up-to-date Linked Data associated with the trillions of entities across the massive LOD Cloud Knowledge Graph, as and whenever you need it (i.e., just-in-time and, most importantly, “hot cache rather than copy”).

DBpedia Newsletter and Semantics Conference 2021

We will be using the DBpedia newsletter and blog to keep you updated on the progress of individual aspects of DBpedia Global such as DBpedia Archivo, DBpedia Live API 2.0, DBpedia Live Instance, and Mods (free VOID generator services). Subscribe to our Newsletter for the latest news and information around DBpedia.

A detailed discussion will also take place at the DBpedia Meeting on September 9, 2021, at SEMANTiCS.

Stay safe!

]]>The post DBpedia Live Restart – Getting Things Done appeared first on DBpedia Association.

]]>DBpedia Live is a long-term core project of DBpedia that immediately extracts fresh triples from all changed Wikipedia articles. After a long hiatus, fresh, live-updated data is available once again, thanks to our former co-worker Lena Schindler, whose work we feature in this blog post. Before we dive into Lena’s report, let’s have a look at some general info about DBpedia Live:

Live Enterprise Version

OpenLink Software provides a scalable, dedicated, live Virtuoso instance, built on Lena’s remastering. Kingsley Idehen announced the dedicated business service in our new DBpedia forum.

On the Databus, we collect publicly shared and business-ready dedicated services in the same place where you can download the data. The Databus allows you to download the data, build a service, and offer that service, all in one place. Data uploaders can also see who builds something with their data.

Remastering the DBpedia Live Module

Contribution by Lena Schindler

After developing the DBpedia REST API as part of a student project in 2018, I worked as a student Research Assistant for DBpedia. My task was to analyze and patch severe issues in the DBpedia Live instance. I will briefly describe the purpose of DBpedia Live, the reasons it went out of service, what I did to fix them, and finally, the changes needed to support multi-language abstract extraction.

Overview

The DBpedia Extraction Framework is Scala-based software with numerous features that have evolved around extracting knowledge (as RDF) from Wikis. One part is the DBpedia Live module in the “live-deployed” branch, which is intended to provide a continuously updated version of DBpedia by processing Wikipedia pages on demand, immediately after they have been modified by a user. The backbone of this module is a queue that is filled with recently edited Wikipedia pages, combined with a relational database, called Live Cache, that handles the diff between two consecutive versions of a page. The module that fills the queue, called Feeder, needs some kind of connection to a Wiki instance that reports changes to a Wiki Page. The processing then takes place in four steps:

- A wiki page is taken out of the queue.

- Triples are extracted from the page, with a given set of extractors.

- The new triples from the page are compared to the old triples from the Live Cache.

- The triple sets that have been deleted and added are published as text files, and the Cache is updated.
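The four steps above can be sketched in a few lines of Python. This is only an illustration of the queue, extract, diff and publish cycle, not the Scala code of the Live module: the extractor `toy_extract`, the in-memory dictionary standing in for the Live Cache, and the returned list standing in for the published text files are all assumptions made for this example.

```python
from collections import deque
from typing import Callable, Deque, Dict, List, Set, Tuple

Triple = Tuple[str, str, str]
ChangeSet = Tuple[str, Set[Triple], Set[Triple]]  # (page title, added, deleted)


def toy_extract(title: str, text: str) -> Set[Triple]:
    """Hypothetical extractor: every 'key=value' line becomes one triple."""
    triples: Set[Triple] = set()
    for line in text.splitlines():
        if "=" in line:
            key, value = line.split("=", 1)
            triples.add((title, key.strip(), value.strip()))
    return triples


def process_queue(
    queue: Deque[Tuple[str, str]],
    extract: Callable[[str, str], Set[Triple]],
    cache: Dict[str, Set[Triple]],
) -> List[ChangeSet]:
    """Drain the queue and return one change set per page whose triples changed."""
    changesets: List[ChangeSet] = []
    while queue:
        title, text = queue.popleft()              # 1. take a page out of the queue
        new = extract(title, text)                 # 2. extract triples from the page
        old = cache.get(title, set())              # 3. compare with the cached triples
        added, deleted = new - old, old - new
        if added or deleted:
            changesets.append((title, added, deleted))  # 4. stand-in for publishing
        cache[title] = new                         # 4. update the cache
    return changesets


if __name__ == "__main__":
    cache = {"Leipzig": {("Leipzig", "population", "600000")}}
    queue = deque([("Leipzig", "population=620000\ncountry=Germany")])
    for title, added, deleted in process_queue(queue, toy_extract, cache):
        print(title, "added:", sorted(added), "deleted:", sorted(deleted))
```

Using set differences keeps the diff independent of triple order, which is why the cache stores sets rather than lists in this sketch.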

Background

DBpedia Live has been out of service since May 2018, due to the termination of the Wikimedia RCStream Service, upon which the old DBpedia Live Feeder module relied. This socket-based service provided information about changes to an existing Wikimedia instance and was replaced by the EventStreams service, which runs over a single HTTP connection using chunked transfer encoding and follows the Server-Sent Events (SSE) protocol. It provides a stream of events, each of which contains information about the title, id, language, author, and time of every page edit across all Wikimedia instances.
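To make the event format more concrete, here is a minimal Python sketch of how an SSE text stream is split into events. It is not the Akka-based Feeder; the helper name `parse_sse` is hypothetical, and the sample payload with its field names (`title`, `wiki`, `user`, `timestamp`) is made up for illustration rather than copied from a real EventStreams response.

```python
import json
from typing import Dict, Iterable, Iterator, List


def parse_sse(lines: Iterable[str]) -> Iterator[Dict[str, str]]:
    """Yield one dict per event; a blank line terminates an event."""
    fields: Dict[str, str] = {}
    data: List[str] = []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line == "":
            if data:  # events without data are not dispatched
                fields["data"] = "\n".join(data)
                yield fields
            fields, data = {}, []
        elif line.startswith(":"):
            continue  # comment line, often used as a keep-alive
        else:
            name, _, value = line.partition(":")
            if value.startswith(" "):
                value = value[1:]  # a single leading space is not part of the value
            if name == "data":
                data.append(value)
            else:
                fields[name] = value


# Made-up sample stream: one keep-alive comment followed by one event.
SAMPLE = [
    ": keep-alive",
    "event: message",
    'data: {"title": "Leipzig", "wiki": "enwiki", "user": "ExampleUser", "timestamp": 1536000000}',
    "",
]

if __name__ == "__main__":
    for event in parse_sse(SAMPLE):
        change = json.loads(event["data"])
        print(change["wiki"], change["title"], change["user"])
```

In a real feeder the `lines` iterable would come from a long-lived HTTP response, and each decoded change would be put on the processing queue.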

Fix

Starting in September 2018, my first task was to implement a new Feeder for DBpedia Live that is based on this new Wikimedia EventStreams Service. For the Java world, the Akka framework provides an implementation of an SSE client. Akka is a toolkit developed by Lightbend. It simplifies the construction of concurrent and distributed JVM applications and can be used from both Java and Scala. The Akka SSE client and the Akka Streams module are used in the new EventStreamsFeeder (Akka Helper) to extract and process the data stream. I decided to use Scala instead of Java, because it is a more natural fit for Akka.

Once I was able to process events, I ran into the problem that frequent interruptions in the upstream connection caused the processing stream to fail. Luckily, Akka provides a fallback mechanism with back-off, similar to the Binary Exponential Backoff of the Ethernet protocol, which I could use to restart the stream (called “Graph” in Akka terminology).
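The idea behind restarting with back-off can be sketched independently of Akka. The Python example below is an assumption-laden illustration, not the Feeder's actual configuration: the parameter names `min_backoff`, `max_backoff` and `random_factor` and the helper names are chosen for this sketch, and the delay simply doubles after each failure up to a cap, with optional random jitter.

```python
import random
import time
from typing import Callable, List, TypeVar

T = TypeVar("T")


def backoff_delays(
    min_backoff: float,
    max_backoff: float,
    restarts: int,
    random_factor: float = 0.0,
    rng: Callable[[], float] = random.random,
) -> List[float]:
    """Return the waiting time before each restart: doubling, capped, with jitter."""
    delays = []
    for attempt in range(restarts):
        base = min(max_backoff, min_backoff * 2 ** attempt)
        delays.append(base * (1.0 + rng() * random_factor))
    return delays


def run_with_restarts(
    connect: Callable[[], T],
    delays: List[float],
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call connect() until it succeeds, sleeping delays[i] after the i-th failure."""
    for delay in delays:
        try:
            return connect()
        except ConnectionError:
            sleep(delay)
    return connect()  # final attempt; an error here propagates to the caller


if __name__ == "__main__":
    print(backoff_delays(min_backoff=1, max_backoff=10, restarts=5))
```

Growing the delay keeps a flapping upstream connection from being hammered with reconnects, while the cap bounds how long the stream stays down once the upstream service recovers.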

Another problem was that in many cases, there were many changes to a page within a short time interval, and if events were processed quickly enough, each change would be processed separately, stressing the Live Instance with unnecessary load. A simple “thread sleep” reduced the number of change-sets being published every hour from thousands to a few hundred.
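Why a pause helps can be shown with a small Python sketch: while the processor waits, several edits to the same page pile up, and only the most recent one needs to be extracted. The post itself only mentions a thread sleep, so the explicit coalescing step and the function name `coalesce` below are assumptions made for illustration.

```python
from typing import Dict, Iterable, List, Tuple

Event = Tuple[str, int]  # (page title, revision id), both hypothetical fields


def coalesce(events: Iterable[Event]) -> List[Event]:
    """Keep only the most recent event per page, ordered by time of last edit."""
    latest: Dict[str, int] = {}
    for title, revision in events:
        latest.pop(title, None)  # re-insert so the order reflects the last edit
        latest[title] = revision
    return list(latest.items())


if __name__ == "__main__":
    burst = [("Leipzig", 101), ("Berlin", 102), ("Leipzig", 103), ("Leipzig", 104)]
    # Four edits arrived during the pause, but only two pages need processing.
    print(coalesce(burst))
```

Each page is then extracted once per pause interval instead of once per edit, which is the effect described above.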

Multi-language abstracts

The next task was to prepare the Live module for the extraction of abstracts (typically the first paragraph of a page, or the text before the table of contents). The extractors used for this task were re-implemented in 2017. Getting them to work turned out to be first a configuration issue and then a candidate for long debugging sessions, fixing issues in the dependencies between the “live” and “core” modules. Then, in order to allow the extraction of abstracts in multiple languages, the “live” module needed many small changes in places spread across the code-base, and care had to be taken not to slow down the extraction in the single-language case compared to the performance before the change. Deployment was delayed by an issue with the remote management unit of the production server, but was accomplished by May 2019.

Summary

I also collected my knowledge of the Live module in detailed documentation, addressed to developers who want to contribute to the code. This includes an explanation of the architecture as well as installation instructions. After 400 hours of work, DBpedia Live is alive and kicking, and now supports multi-language abstract extraction. Being responsible for many aspects of Software Engineering, like development, documentation, and deployment, I was able to learn a lot about DBpedia and the Semantic Web, hone new skills in database development and administration, and expand my programming experience using Scala and Akka.

“Thanks a lot to the whole DBpedia Team who always provided a warm and supportive environment!”

Thank you Lena, it is people like you who help DBpedia improve and develop further, and help to make data networks a reality.

Follow DBpedia on LinkedIn, Twitter or Facebook and stop by the DBpedia Forum to check out the latest discussions.

Yours DBpedia Association

]]>